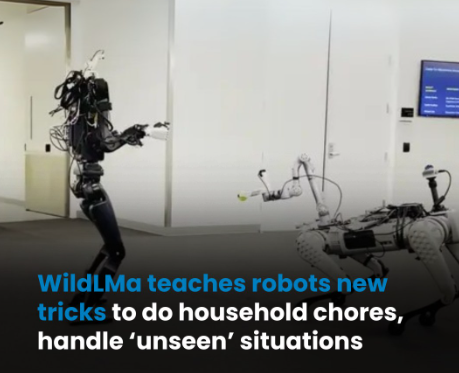

Robotics research is steadily advancing towards a future in which robots are integrated into daily human life, take on mundane tasks, and free up people’s time for more enjoyable activities.

While it may sound like a distant dream, researchers at UC San Diego are bringing this vision closer to reality with their groundbreaking work on WildLMa, a new framework designed to enhance the capabilities of quadruped robots.

“Quadruped robots with manipulators hold promise for extending the workspace and enabling robust locomotion, but existing results do not investigate such a capability,” said the researchers in a paper on the arXiv preprint server.

WildLMa focuses on improving the robots’ ability to perform “loco-manipulation” tasks, which involve manipulating objects while moving around in their environment. It aims to enable robots to not only navigate homes but also pick up clutter, fetch items, and even put away groceries.

“Consider a scenario where a mobile robot is deployed out-of-box at a family house. The robot is tasked with daily chores including collecting the trash around the house and grabbing something for humans,” added the research team.

Such chores, however, demand a high level of coordination and adaptability.

Challenges in real-world settings

Traditional methods for training robots often rely on imitation learning, where robots learn by observing and mimicking human demonstrations. While this approach has shown promise in simulated environments, it often falls short when robots are deployed in the “unseen” real world.

The complexity and unpredictability of real-world environments require robots to generalize their learned skills and adapt to new situations. “WildLMa designs three components to address challenges for in-the-wild mobile manipulation,” asserted the team.

First, it uses a VR-based teleoperation system that lets human operators control the robot, with pre-trained control algorithms translating their commands into the robot’s movements. This simplifies the collection of expert demonstration data, as humans can intuitively guide the robot through various tasks.

Second, WildLMa leverages Large Language Models (LLMs) to break down complex tasks into smaller, more manageable steps, much as humans tackle a problem by dividing it into sub-tasks. Given a clear sequence of actions, the robot can carry out long, multi-step tasks one step at a time.
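To make the idea of LLM-based task decomposition concrete, here is a minimal sketch in Python. It is not WildLMa’s code: the skill names (`navigate_to`, `pick`, `place`), the numbered-list reply format, and the `fake_llm_reply` function are all hypothetical. `fake_llm_reply` stands in for a real language-model call and simply returns a fixed plan, which the sketch parses, checks against the available skills, and executes in order.

```python
import re
from typing import Callable


# Hypothetical skill stubs. On a real robot each would run a learned or
# scripted controller; here they just report success.
def navigate_to(target: str) -> bool:
    return True


def pick(target: str) -> bool:
    return True


def place(target: str) -> bool:
    return True


SKILLS: dict[str, Callable[[str], bool]] = {
    "navigate_to": navigate_to,
    "pick": pick,
    "place": place,
}


def fake_llm_reply(instruction: str) -> str:
    # Stand-in for an LLM call (hypothetical). A real planner would prompt a
    # model with the instruction plus the list of skill names and return its text.
    return (
        "1. navigate_to(trash)\n"
        "2. pick(trash)\n"
        "3. navigate_to(trash bin)\n"
        "4. place(trash bin)\n"
    )


# Matches one numbered step such as "2. pick(trash)".
STEP = re.compile(r"^\s*\d+\.\s*(\w+)\((.*)\)\s*$")


def parse_plan(reply: str) -> list[tuple[str, str]]:
    """Turn the planner's numbered list into (skill, argument) pairs."""
    steps = []
    for line in reply.strip().splitlines():
        match = STEP.match(line)
        if match is None:
            raise ValueError(f"cannot parse step: {line!r}")
        skill, arg = match.group(1), match.group(2).strip()
        if skill not in SKILLS:  # reject skills the robot does not have
            raise ValueError(f"unknown skill: {skill}")
        steps.append((skill, arg))
    return steps


def run(instruction: str) -> list[str]:
    """Execute sub-tasks in order, stopping at the first failure."""
    completed = []
    for skill, arg in parse_plan(fake_llm_reply(instruction)):
        if not SKILLS[skill](arg):
            break
        completed.append(f"{skill}({arg})")
    return completed
```

Calling `run("collect the trash in the hallway")` returns the four completed steps, from `navigate_to(trash)` through `place(trash bin)`. Validating the plan against a fixed skill list before executing anything is one simple way to keep a language model from asking the robot to do something it cannot.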

Third, the framework incorporates attention mechanisms that let the robot focus on target objects while performing tasks. This helps the robot concentrate on what matters for the task at hand and ignore distracting elements in its surroundings.
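One common way to make a model “focus on a target object” is text-conditioned attention: score each image region by how similar its feature vector is to an embedding of the object’s name, turn the scores into weights with a softmax, and pool. The Python sketch below illustrates that general technique only; it assumes the patch features and the text embedding come from some vision-language model (not shown), uses toy 3-dimensional vectors, and the `temperature` value is an arbitrary choice. It should not be read as WildLMa’s actual implementation.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def softmax(scores: list[float], temperature: float) -> list[float]:
    """Numerically stable softmax; lower temperature gives sharper focus."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    patches: list[list[float]], query: list[float], temperature: float = 0.1
) -> tuple[list[float], list[float]]:
    """Weight image-patch features by similarity to a text query, then pool."""
    weights = softmax([cosine(p, query) for p in patches], temperature)
    pooled = [
        sum(w * p[i] for w, p in zip(weights, patches)) for i in range(len(query))
    ]
    return weights, pooled


# Toy example: patch 0 matches the query ("the target object"); the other
# two patches stand for unrelated background clutter.
patches = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [1.0, 0.0, 0.0]
weights, pooled = attend(patches, query)
```

With these toy inputs nearly all of the weight lands on the first patch, so the pooled feature is dominated by the matching region and the two background patches contribute almost nothing.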

Training and experiments

The researchers have demonstrated the effectiveness of WildLMa in a series of real-world experiments.

“Besides extensive quantitative evaluation, we qualitatively demonstrate practical robot applications, such as cleaning up trash in university hallways or outdoor terrains, operating articulated objects, and rearranging items on a bookshelf,” highlighted the team.

These experiments showcase the potential of WildLMa to enable robots to perform useful tasks in our everyday lives.

“Our experiments include 20 training sequences with variations in robot positioning, lighting, and object placement in both the training and testing time,” concluded the researchers.